CES 2019: FinchShift goes 6DOF with their new controllers

My first meeting at CES 2019 was with Finch to see their new 6DOF setup, the FinchShift controller. We're talking about a 6DOF solution for standalone headsets and even PC HMDs.

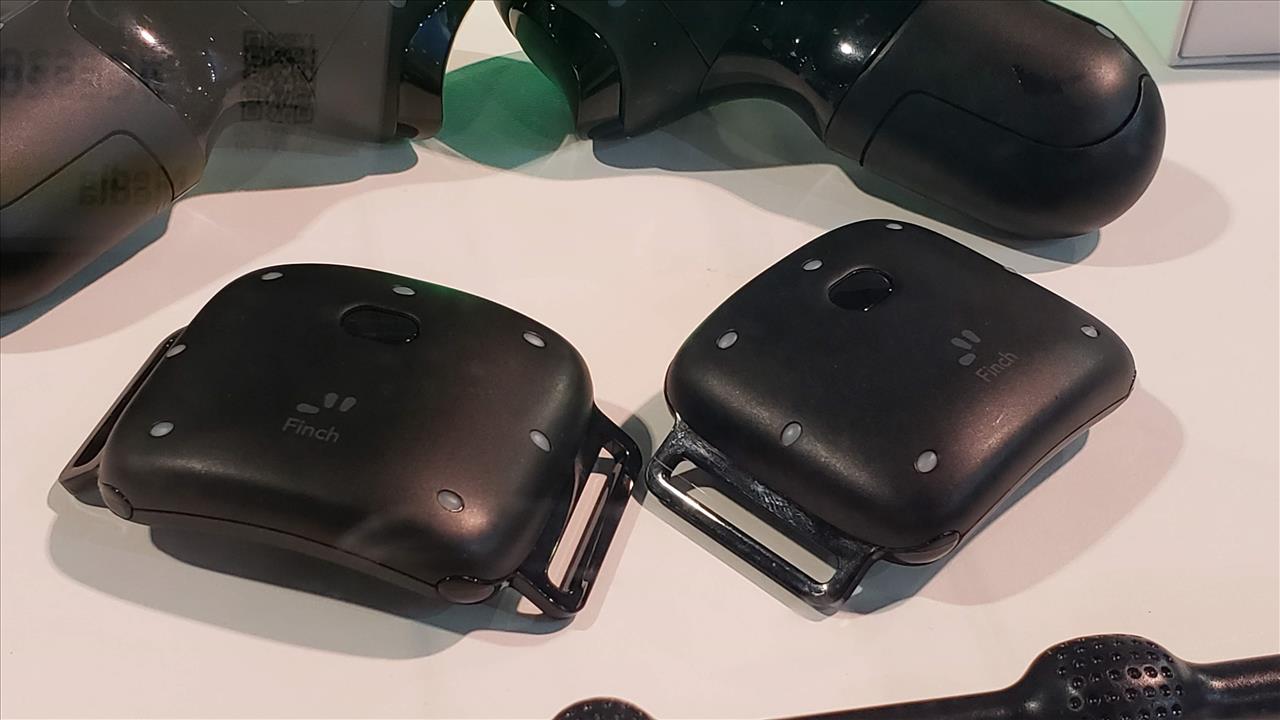

The FinchShift setup consists of two IMUs that you wear just above the elbow and two small controllers that, for the demo, featured a touchpad, trigger, grip buttons, home button, and an app button. There's also a variation that features a joystick, since gamers tend to prefer that control scheme over a touchpad.

These IMUs and the software that Finch has developed are the special sauce that allows Finch to calculate where the controllers are as you move them around. You no longer need to keep them in the headset's line of sight, as you do with Windows Mixed Reality controllers, and you feel like you have total control.
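Finch didn't walk me through their algorithm, so take the following as nothing more than a rough illustration of the general idea: if you know the orientation of the upper arm (from the IMU above the elbow) and of the forearm (from the controller), you can chain the two segments together to estimate where the hand is without any camera. Everything here is an assumption for the sake of the sketch, including the function names, the segment lengths, the shoulder position, and the convention that Y points up and an arm at rest hangs straight down.

```python
import math

def quat_from_axis_angle(axis, angle_rad):
    """Unit quaternion (w, x, y, z) for a rotation of angle_rad about axis."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle_rad / 2) / norm
    return (math.cos(angle_rad / 2), ax * s, ay * s, az * s)

def rotate(q, v):
    """Rotate 3D vector v by unit quaternion q."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q_vec, v)
    tx = 2 * (y * vz - z * vy)
    ty = 2 * (z * vx - x * vz)
    tz = 2 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_vec, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )

def estimate_hand_position(shoulder, upper_arm_q, forearm_q,
                           upper_len=0.30, forearm_len=0.27):
    """Two-segment forward kinematics (hypothetical, not Finch's method).

    shoulder     -- assumed shoulder position in metres, e.g. offset from the HMD
    upper_arm_q  -- orientation reported by the IMU worn above the elbow
    forearm_q    -- orientation reported by the handheld controller
    upper_len, forearm_len -- assumed segment lengths in metres
    """
    # Rest pose: both segments hang straight down along -Y.
    upper_vec = rotate(upper_arm_q, (0.0, -upper_len, 0.0))
    fore_vec = rotate(forearm_q, (0.0, -forearm_len, 0.0))
    elbow = tuple(s + u for s, u in zip(shoulder, upper_vec))
    hand = tuple(e + f for e, f in zip(elbow, fore_vec))
    return hand

# Example: upper arm hanging down, forearm rotated 90 degrees about the X axis.
identity = (1.0, 0.0, 0.0, 0.0)
bent = quat_from_axis_angle((1.0, 0.0, 0.0), math.pi / 2)
hand = estimate_hand_position((0.2, 1.4, 0.0), identity, bent)
```

A real system would also need to deal with sensor drift and per-user arm lengths, which is presumably part of what the calibration step is for.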

Calibration was simple: all you had to do was shake your right arm and then line the controllers up in front of you to match the overlay you see. You'll have to do this each time you start up an HMD, but Finch wants to make sure any discrepancies are zeroed out before you start using the controllers.

I participated in two demos. In one, I held a sword and shield and hacked and slashed at the enemies presented to me. The other was a simpler demo that had you blocking a soccer ball kicked your way. Let's start with the sword demo.

I did as much as I could to confuse the controllers, such as occluding them and looking away. I was surprised at how well the FinchShift controllers responded and kept their tracking, even during extreme movements. I looked at my sword and held it up high, looked away, moved it down low behind my body, rotated my arm, looked back, and saw the sword right where I thought it would be. Finch's tracking of movement, rotation, and distance was spot on. I was able to get the sword and shield to jitter during slow movements, but I attributed that to the pre-release demo being used and perhaps a lack of optimization. The reason I say this is that the soccer demo showed none of the jitter that the sword demo did.

For the soccer demo, I was able to use either hand to block the ball or swat it away, even when looking away. The software kept track of my motion, and I was told that the medical field is really interested in this technology as a way to gauge how a patient is doing in rehab or to see where the problems lie in their range of motion. Because the software can record a person's motion, including position, rotation, and range of movement, a doctor or physical therapist could use the data from the IMUs to recommend exercises that address the patient's problems. It's a pretty exciting option for this technology and one that could benefit a lot of people.

HTC is going to include support for the FinchShift controllers in their upcoming SDK so that the technology will be available for all developers to use. Qualcomm is also going to have native support on their Snapdragon 845 processor, which is found in a few high-end phones today. Here's hoping many developers incorporate this into their software, because I'd love a way to use these controllers with, say, a Samsung Gear VR or even a Windows Mixed Reality headset, where your controllers tend to drop to 3DOF when they're out of sight of the cameras.

The setup of two IMUs (which, by the way, will last 18 hours before the AAA batteries need changing) and two controllers is now available for pre-order as a dev kit, including the SDK, for $249, but Finch is looking to bring the price down to around $100 when consumer units hit the market. That is good to hear considering other companies are charging a high premium for their VR peripherals.

I was highly impressed by what I was able to experience with the FinchShift controllers. With their aggressive pricing strategy, here's hoping Finch can bring this kind of tech to the mass market and that we see heavy adoption by developers as well.