while True: learn()

While there is certainly no shortage of various flavors of programming games, it is beginning to be more difficult to create something along those lines that hasn’t been done before. As such, it is no longer enough just to churn out something that provides an unadulterated programming game; these days, there needs to either be some kind of twist to the programming aspects, or additional aspects that mirror some part of a programmer’s professional life. Consider SHENZHEN I/O. While there are aspects of the programming that are unique to the genre, the reality of an engineer’s life is modeled by the providing of spec sheets for the components being used. If you have ever ordered an IC chip or higher functioning board, you will know the joys inherent in trying to discern how to use it from the often poorly written descriptions in the spec sheets.

In a similar vein, ‘while True: learn()’ (hereafter referred to as ‘wt:l’ for easier readability) enhances an already unique programming style with other contemporaneous challenges such as budgetary, accuracy, and performance requirements, along with the need to pay for cloud server time. You are also provided opportunities to create startup companies based on the technology you have developed. As a recently retired IT Director/Developer, I can tell you that things like that take every bit as much time and effort as the actual programming does. Budgeting in particular has become ever more difficult with the advent of rented, cloud-based servers. While it would be relatively easy to forecast costs for an owned or rented server, it has become far more difficult to determine in environments such as Microsoft Azure where pricing can be influenced by processor usage. As an incentive to write tight code, that model works well. When trying to convince the CFO that we could afford it, not so much.

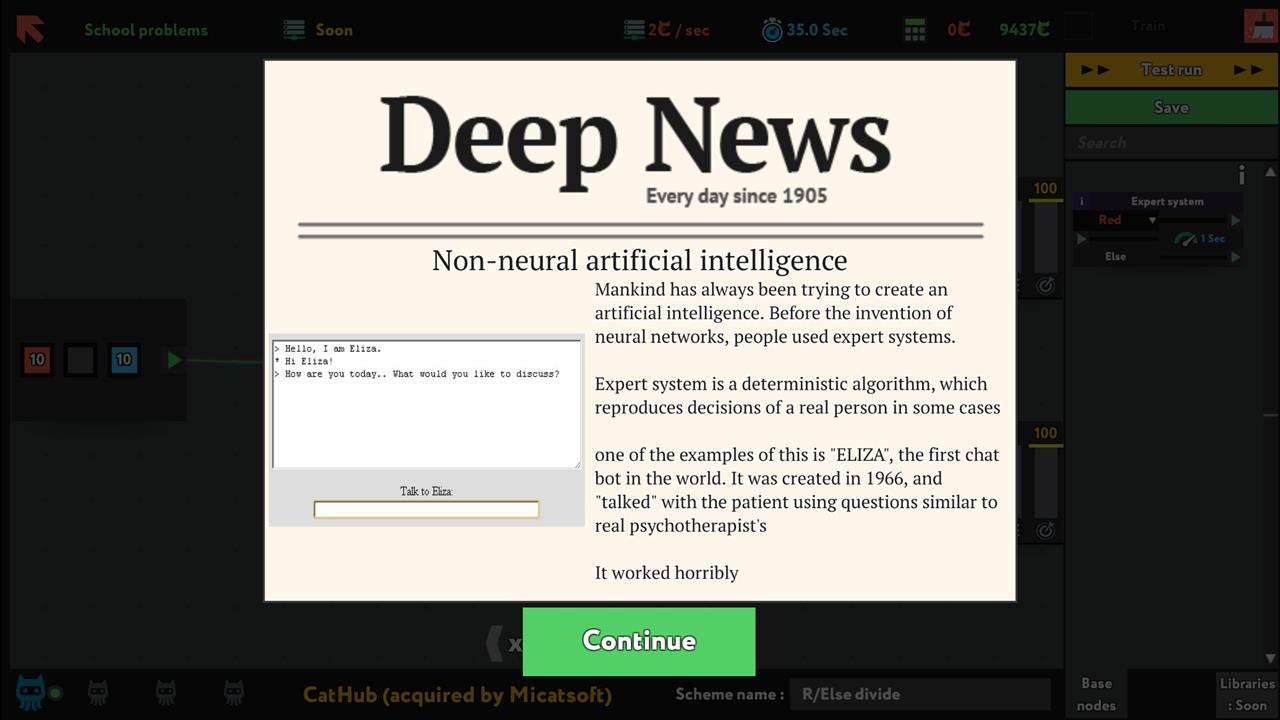

Another wrinkle added by wt:l is the subject of Machine Learning, which according to Wikipedia is “...a subset of artificial intelligence in the field of computer science that often uses statistical techniques to give computers the ability to "learn" (i.e., progressively improve performance on a specific task) with data, without being explicitly programmed.” What this equates to in wt:l is a subset of components that perform poorly when first added to a program, but through repeated test cycles can self-improve to a much more accurate, yet not quite one hundred percent, performance level. They tend to be relatively inaccurate, but also relatively fast.

That trade-off between being fast or correct is the very essence of the programming aspect of wt:l. You might get a contract that stipulates that high accuracy is valued over performance one day, and the next day get a contract that doesn’t mind a bit of sloppiness as long as it is fast, fast, FAST. In the early levels, addressing these differences is straightforward and easily accomplished. In later levels, you will encounter contracts that insist on both speed and accuracy. On a personal note, those are the levels that left me no recourse but to randomly try various mixes of fast components and accurate components with the same fervor as a blind squirrel hunting for nuts. At some point, that methodology could no longer make up for the weakness of my brain. Parallel processing is eventually introduced as well, and that is way beyond the point where my penchant for linear thinking can take me.

The process in wt:l is to receive an offer for a contract to solve some sort of business problem for the prospective customer. The reasons provided have very little relation to what you will eventually develop as a solution, so they are really nothing more than window dressing, but they do contribute to at least some level of a business ambiance. Any IT contractor can tell you that the first user stories are typically poorly researched fiction.

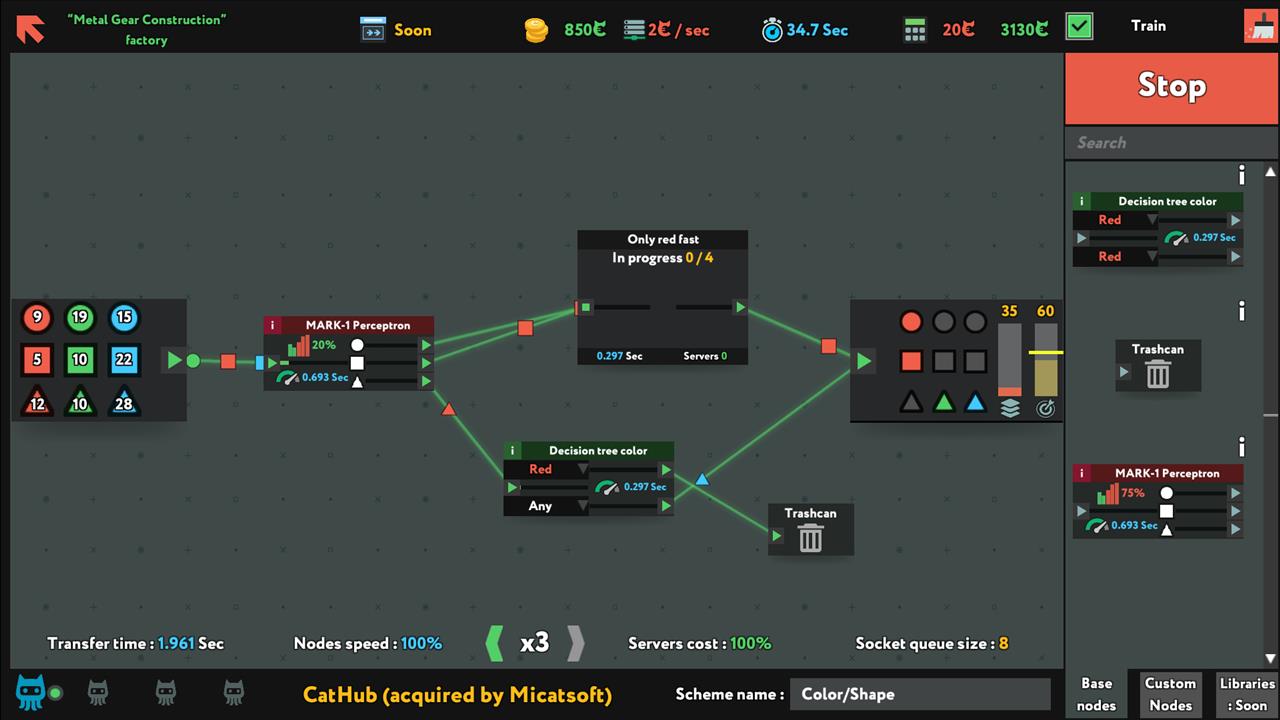

What you actually solve for is a program that will take a collection of colors and/or shapes from the data input stream and guide them to the correct output stream as defined in the output requirements. As stated before, you will have to do this with a collection of fast OR accurate components, and you will have to meet both performance and accuracy constraints. You do this by selecting from either basic nodes, which are provided by the game, or custom nodes, which are packaged components made up from your results from previous contracts.

The design is created with drag & drop components. These components are then connected to each other by wires between components, the input stream, and the output streams. Dragging the wires is easy, but the pathing on the design board is straight lines only. This can (and does) lead to a difficult-to-read mess of spaghetti on the design surface as wires cross each other or get hidden behind the components, which is unfortunate. Wire pathing (as I understand from an interview with a developer of a similar game) is extremely difficult, though, and as the straight-line wiring does not severely impact the game experience, improving it probably wouldn’t be worth the effort. It sure would help aesthetically, though.

Once the design is complete enough for rudimentary testing, a test run is performed. In the test run, the data flows through the design and simulates the performance that will be seen once run on the server. Testing is free, but running on the server costs money. Money is important in this game because it can be used to buy access to higher performance servers, something commonly referred to as “throwing money at a bad design.” But hey, deadlines are deadlines and the company has plenty of dough - do what it takes!

There are also components that start out stupid but can be trained to be better, although they are not likely to reach 100% accuracy. Training is done by selecting the ‘Train’ checkbox and running repeated tests. My experience demonstrated training results that took a component that was wrong 66% of the time down to being wrong 20% of the time. While not perfect, that 20% often came in handy when trying to limit the number of performance-sucking components. When challenged with a case that needs, for example, a lot of red inputs in one area but just a few in another, it often was enough just to count on the 20% error rate to guide some data to the output box that only needed a handful of the data. I’m not sure one would use that approach in critical cases like spaceships and Lasik machines, but for the mundane day-to-day stuff it seems okay.
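To make that trick concrete, here is a rough Python sketch of a trainable component treated as nothing more than a coin flip with a given error rate. This is not how the game models training internally; the function names, the "main" and "spill" output labels, and the sample size of 1,000 are all my own assumptions, and only the 66% and 20% error rates come from my play-through.

```python
import random


def trained_sorter(error_rate, rng):
    """Hypothetical component: routes an item to 'main' when it is right
    and to 'spill' when it makes a mistake."""
    return "spill" if rng.random() < error_rate else "main"


def route(n_items, error_rate, seed=0):
    """Send n_items through the component and count where they end up."""
    rng = random.Random(seed)  # fixed seed so repeated runs match
    counts = {"main": 0, "spill": 0}
    for _ in range(n_items):
        counts[trained_sorter(error_rate, rng)] += 1
    return counts


# Error rates from the review: wrong 66% of the time before training,
# wrong 20% of the time after.
untrained = route(1000, 0.66)
trained = route(1000, 0.20)
print("untrained:", untrained)
print("trained:  ", trained)
```

With the trained component, roughly a fifth of the items leak into the "spill" output, which is the handful the second output box wanted, and no extra sorting component had to be paid for.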

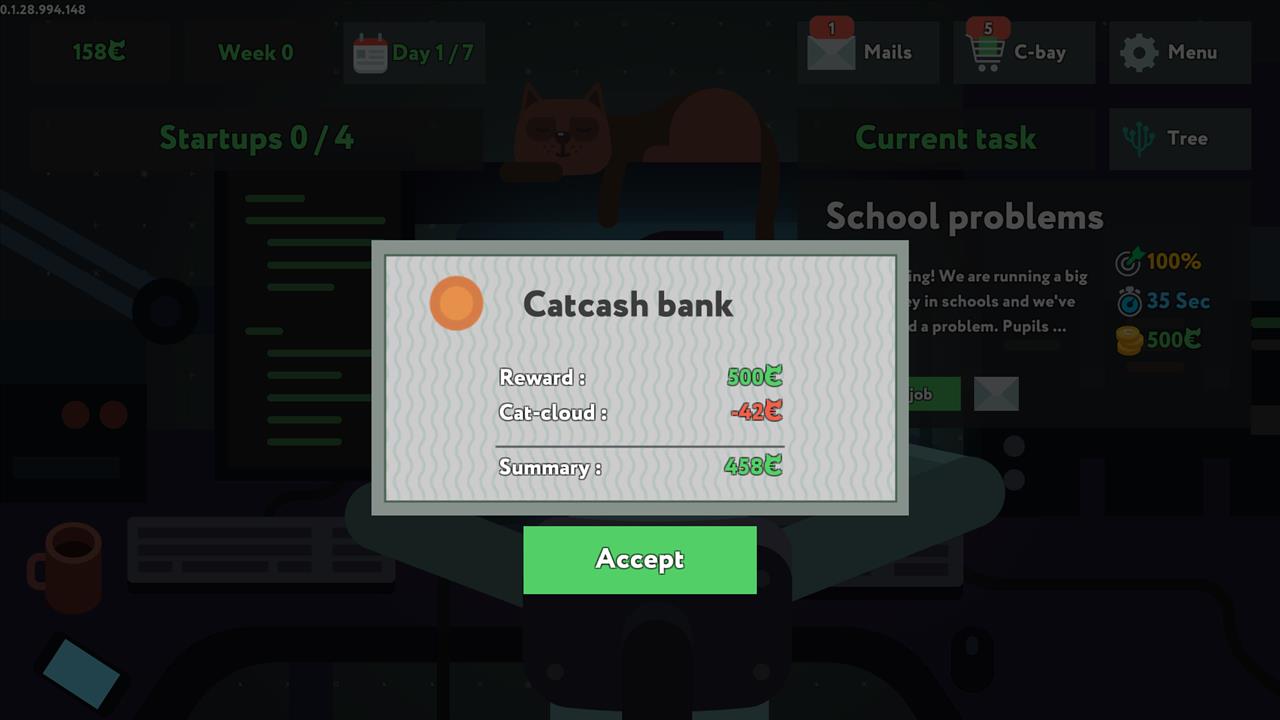

Once the components are trained and test runs are working, you can deploy the system. That means running it against the cloud server. The server charges a small ‘per operation’ fee, which naturally grows into considerable money. The money spent on the deployment run is subtracted from the contract amount, and you keep the difference.
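The arithmetic behind that is simple enough to sketch in a few lines of Python. The contract amount, operation counts, and fee below are numbers I made up for illustration; the game does not publish a formula, so treat this as the general shape of the calculation rather than its actual billing rules.

```python
def deployment_profit(contract_amount, operations, fee_per_operation):
    """Money kept after the server bill is subtracted from the contract."""
    server_cost = operations * fee_per_operation
    return contract_amount - server_cost


# Hypothetical figures: the same contract served by a lean design and by a
# bloated one that needs twice as many operations to do the same job.
lean = deployment_profit(5000, 6000, 0.25)
bloated = deployment_profit(5000, 12000, 0.25)
print("lean design keeps:   ", lean)
print("bloated design keeps:", bloated)
```

Doubling the operation count doubles the server bill, so a design that wastes operations eats directly into what you take home.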

And so it goes, right up until you complete the game or run shy of brainpower. I fell into the latter group, but that is not to say that I didn’t enjoy the game. It’s attractive, well designed, and although the stories behind the contracts were uninspiring, the inclusion of real world business constraints gave it a strong feeling of reality. The difficulty curve ramped up quickly, or perhaps it simply exceeded my limited capacities, but when it came to the point where both accuracy and performance were equally paramount, my brain crumpled into a tight little ball and refused to help. Again, I place no blame on the game - the discussion board is populated with people that didn’t get stuck until level 33, so it clearly isn’t impossible to figure out a way to solve the contracts that I reneged on. It just takes a different kind of thinking than mine.

* The product in this article was sent to us by the developer/company.

About Author

I've been fascinated with video games and computers for as long as I can remember. It was always a treat to get dragged to the mall with my parents because I'd get to play for a few minutes on the Atari 2600. I partially blame Asteroids, the crack cocaine of arcade games, for my low GPA in college which eventually led me to temporarily ditch academics and join the USAF to "see the world." The rest of the blame goes to my passion for all things aviation, and the opportunity to work on the truly awesome SR-71 Blackbird sealed the deal.

My first computer was a TRS-80 Model 1 that I bought in 1977 when they first came out. At that time you had to order them through a Radio Shack store - Tandy didn't think they'd sell enough to justify stocking them in the retail stores. My favorite game then was the SubLogic Flight Simulator, which was the great-granddaddy of the Microsoft flight sims.

While I was in the military, I bought a Commodore 64. From there I moved on up through the PC line, always buying just enough machine to support the latest version of the flight sims. I never really paid much attention to consoles until the Dreamcast came out. I now have an Xbox for my console games, and a 1 GHz Celeron with a GeForce4 for graphics. Being married and having a very expensive toy (my airplane) means I don't get to spend a lot of money on the latest/greatest PC and console hardware.

My interests these days are primarily auto racing and flying sims on the PC. I'm too old and slow to do well at the FPS twitchers or fighting games, but I do enjoy online Rainbow 6 or the like now and then, although I had to give up America's Army due to my complete inability to discern friend from foe. I have the Xbox mostly to play games with my daughter and for the sports games.
